Have you ever wondered how your phone automatically suggests the perfect reply to a text? Or how Netflix uncannily knows which movie you’ll want to watch next? If you’ve used either, you’ve already interacted with Artificial Intelligence. AI is no longer a futuristic concept from science fiction; it’s a powerful technology woven into the fabric of our daily lives. But what is it, really? This comprehensive guide to AI for beginners is designed to lift the curtain. We will explore the fundamental ideas behind AI, break down how it actually works using simple analogies, discover the incredible applications you use every day, and map out a path for you to continue learning. I’m here to be your guide, translating the complex into the clear, and turning confusion into curiosity.

What is Artificial Intelligence Explained Simply? (Beyond the Sci-Fi)

When most people hear “Artificial Intelligence,” their minds often jump to images of sentient machines like C-3PO from Star Wars or the menacing HAL 9000 computer from 2001: A Space Odyssey. While these characters make for great storytelling, they don’t capture the reality of the AI that exists today. In my experience teaching this topic, the first and most important step is to ground our understanding in a practical, real-world definition.

At its core, Artificial Intelligence (AI) is the simulation of human intelligence processes by computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions), and self-correction. Essentially, AI is a broad field of computer science dedicated to building smart machines capable of performing tasks that typically require human intelligence.

Think of it less like building a conscious person and more like building a hyper-efficient, specialized intern. This intern can’t feel emotions or ponder the meaning of existence, but it can sift through millions of documents in seconds to find the one you need, identify patterns in complex data that no human could ever spot, and make highly accurate predictions based on past information.

The Core Goal: To Think, Learn, and Adapt

The ultimate ambition of AI research is to create systems that can not only perform tasks but can also learn and adapt as they do them. This is what truly separates AI from traditional computer programming. A traditional program follows a strict, pre-written set of “if-then” instructions. For example, “IF a user clicks ‘buy,’ THEN charge their credit card.” The program can’t deviate from these instructions.

An AI system, however, is designed to be flexible. It might be given the goal of “increase sales” and then learn how to achieve that goal from data rather than from hand-written rules. It might analyze user behavior and discover that people who buy product A are 80% likely to also be interested in product C, so it starts recommending product C. If that strategy works, it reinforces it. If it doesn’t, it adapts and tries another. This ability to learn from outcomes and adjust its strategy is the magic of modern AI. The idea has deep roots. In 1950, Alan Turing proposed what is now called the “Turing Test,” a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. In the same paper he suggested building machines that learn, much as a child does.
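The “try, measure, reinforce, adapt” loop described above closely resembles a classic problem known as the multi-armed bandit. Below is a minimal epsilon-greedy sketch. It assumes a made-up shop where each product has a hidden click probability that the program does not know in advance. The function name `epsilon_greedy`, the product names, and the numbers are all hypothetical, and real recommendation systems are far more elaborate.

```python
import random

def epsilon_greedy(click_rates, rounds=5000, epsilon=0.1, seed=0):
    """Learn which product to recommend by trial and error.

    click_rates: hypothetical hidden probability that a shopper clicks each product.
    Returns the estimated click rate for each product after `rounds` recommendations.
    """
    rng = random.Random(seed)
    products = list(click_rates)
    shows = {p: 0 for p in products}    # how often each product was recommended
    clicks = {p: 0 for p in products}   # how often that recommendation "worked"

    def estimate(p):
        return clicks[p] / shows[p] if shows[p] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(products)          # explore: try something at random
        else:
            choice = max(products, key=estimate)   # exploit: reinforce what has worked best so far
        shows[choice] += 1
        if rng.random() < click_rates[choice]:     # simulate the shopper's reaction
            clicks[choice] += 1

    return {p: estimate(p) for p in products}

# Hypothetical click probabilities that the program does NOT know in advance.
hidden = {"product B": 0.05, "product C": 0.30, "product D": 0.10}
learned = epsilon_greedy(hidden)
best = max(learned, key=learned.get)
print(best, round(learned[best], 2))
```

Most of the time the loop reinforces whichever product has worked best so far. The other 10% of the time (`epsilon=0.1`) it still experiments, which is how it can discover that a different strategy works better.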

Why is AI Exploding Now? The Trio of Power (Data, Algorithms, Computing)

If the ideas for AI have been around for over 70 years, why does it feel like it has exploded in just the last decade? The answer lies in a “perfect storm” of three key developments coming together:

- Big Data: AI systems, particularly Machine Learning models, are incredibly data-hungry. To learn how to identify a cat reliably, an AI typically needs to see thousands, sometimes millions, of labeled pictures of cats. The internet and the digital age have created an unfathomable amount of data (text, images, videos, and sensor readings) that serves as the fuel for these systems. The volume of data created worldwide keeps growing year after year, providing the rich “textbooks” from which AI can learn.

- Advanced Algorithms: Scientists and engineers have made monumental breakthroughs in developing more sophisticated algorithms. These are the mathematical recipes that process data, find patterns, and make decisions. Techniques like “deep learning” and “neural networks,” which we will explore shortly, are now more powerful and effective than ever before.

- Powerful Computing: Training an AI model on massive datasets requires immense computational power. In the past, this was prohibitively expensive and slow. The development of specialized processors, like Graphics Processing Units (GPUs), and the rise of cloud computing have made this power accessible and affordable. Companies and even individuals can now rent supercomputing power from services like Amazon Web Services (AWS) or Google Cloud, democratizing the ability to build and train powerful AI.

This convergence of massive data, smart algorithms, and accessible computing power has created the fertile ground for the AI revolution we are witnessing today.

How Does AI Work for Beginners? The Learning Machine

Understanding the inner workings of AI can seem daunting, filled with terms like “neural networks,” “algorithms,” and “backpropagation.” But the fundamental concept is surprisingly intuitive. Let’s strip away the jargon and use a simple, human analogy that I’ve found resonates perfectly with beginners: teaching a young child to recognize a cat.

Imagine you want to teach a toddler what a “cat” is. You wouldn’t write a list of rigid rules like “IF it has pointy ears AND whiskers AND a long tail AND fur, THEN it is a cat.” Why? Because some cats have folded ears, some have short tails, and a Sphynx cat is almost hairless. This rule-based approach is too brittle.

Instead, you use a learning-based approach. You show the child hundreds, maybe thousands, of pictures of cats of all shapes, sizes, and colors, and for each one, you say, “This is a cat.” You also show them pictures of dogs, rabbits, and squirrels, saying, “This is not a cat.”

Over time, the child’s brain subconsciously starts to identify the complex patterns and features that define “cat-ness.” They form an abstract concept, a mental model, of what a cat is. Eventually, you can show them a picture of a cat they’ve never seen before, and they will correctly identify it. They have learned.

This is, in spirit, how most modern AI works, specifically the branch called Machine Learning. Learning from labeled examples like these is known as supervised learning.
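To make the analogy concrete, here is a minimal sketch of one of the simplest learning techniques, k-nearest neighbors, in plain Python. Instead of real photos, each animal is described by three hypothetical hand-picked scores: ear pointiness, whisker prominence, and snout length. Real image classifiers learn their own features from raw pixels. The data and the `classify` helper are illustrative assumptions.

```python
import math
from collections import Counter

# Each example: (features, label). The three features are hypothetical 0-to-1 scores
# for ear pointiness, whisker prominence, and snout length.
training_data = [
    ((0.9, 0.9, 0.2), "cat"),
    ((0.8, 0.8, 0.3), "cat"),
    ((0.3, 0.9, 0.2), "cat"),        # a folded-ear cat is still labeled a cat
    ((0.9, 0.7, 0.25), "cat"),
    ((0.4, 0.3, 0.8), "not a cat"),
    ((0.8, 0.2, 0.9), "not a cat"),
    ((0.5, 0.6, 0.6), "not a cat"),
    ((0.2, 0.1, 0.7), "not a cat"),
]

def classify(features, examples, k=3):
    """Label a new example by majority vote among its k closest training examples."""
    nearest = sorted(examples, key=lambda ex: math.dist(features, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

new_animal = (0.85, 0.85, 0.25)   # an animal the program has never seen before
print(classify(new_animal, training_data))
```

Notice there is no “IF pointy ears THEN cat” rule anywhere. The folded-ear example is still labeled a cat, and the program simply compares each new animal with the labeled examples it has already seen.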

The Fuel: It All Starts with Data

In our analogy, the pictures of cats and non-cats are the data. For an AI, this is the most critical ingredient. Without high-quality, abundant, and well-labeled data, an AI cannot learn anything useful. This data can be almost anything:

- Images: Millions of photos for facial recognition or medical scan analysis.

- Text: Enormous collections of web pages, books, and articles to train a language model like ChatGPT.

- Numbers: Years of stock market data to try to forecast future trends.

- Sound: Thousands of hours of speech to train a voice assistant like Alexa.

The quality of this data is paramount. If we only showed the child pictures of black cats, they might mistakenly think a white cat is not a cat. This is a simple example of bias, a major challenge in AI that we’ll discuss later.
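Here is a minimal sketch of that black-cat problem, using a deliberately simple technique called a nearest-centroid classifier. The two features (fur brightness and ear pointiness) and every data point are hypothetical. The point is only to show how skewed training data produces skewed answers.

```python
import math

def centroid(points):
    """Average each feature across a list of examples."""
    return tuple(sum(values) / len(points) for values in zip(*points))

def train(examples):
    """Learn one 'prototype' per label: the average of that label's training examples."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(points) for label, points in by_label.items()}

def predict(model, features):
    """Pick the label whose prototype is closest to the new example."""
    return min(model, key=lambda label: math.dist(features, model[label]))

# Hypothetical features: (fur brightness, ear pointiness), both scaled 0 to 1.
non_cats = [((0.9, 0.3), "not a cat"), ((0.85, 0.2), "not a cat"), ((0.4, 0.25), "not a cat")]
only_black_cats = [((0.1, 0.9), "cat"), ((0.05, 0.85), "cat"), ((0.15, 0.95), "cat")]
varied_cats = only_black_cats + [((0.9, 0.85), "cat"), ((0.5, 0.9), "cat")]

white_cat = (0.95, 0.9)
biased_model = train(only_black_cats + non_cats)   # learned only from black cats
fairer_model = train(varied_cats + non_cats)       # learned from cats of several colors
print(predict(biased_model, white_cat))
print(predict(fairer_model, white_cat))
```

The algorithm is identical in both cases. Only the training data changed.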

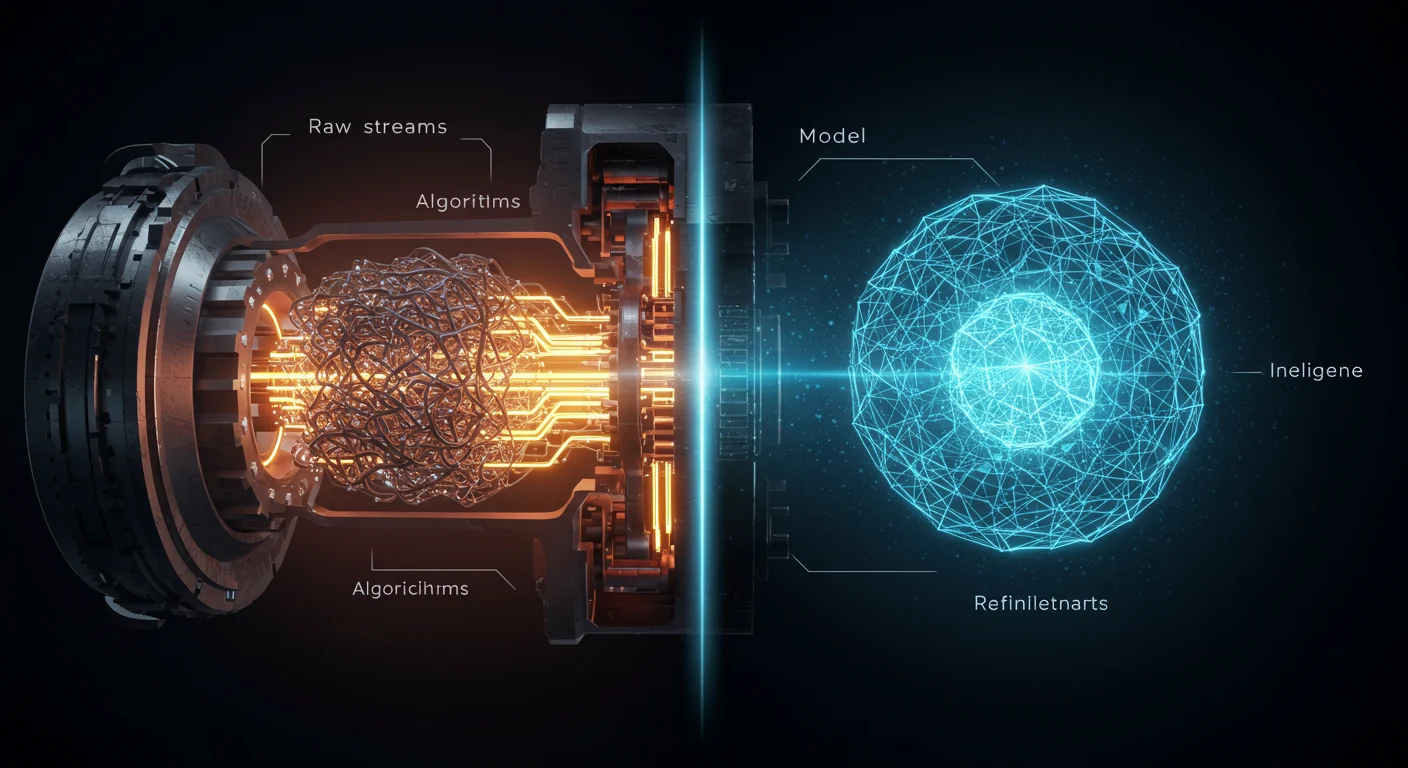

The Engine: Algorithms and Models

The algorithm is the learning process itself—the method the machine uses to analyze the data and find patterns. The model is the output of that process; it’s the “brain” or the collection of patterns that the AI has learned.

Going back to our analogy, the child’s brain uses a learning algorithm (a biological one, in their case) to create their mental model of a cat. An AI system uses a mathematical algorithm to create a digital model. This model isn’t a simple list of rules but a complex web of interconnected mathematical weights and variables that collectively represent the concept of “cat.”
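The same split between algorithm and model can be seen in a much smaller problem. The sketch below is a swapped-in example rather than a cat classifier. It uses gradient descent (the algorithm) to fit a straight line to a few hypothetical data points. What it produces, the model, is nothing more than two learned numbers, `w` and `b`. A real image model follows the same principle but with vastly more numbers.

```python
def train(xs, ys, learning_rate=0.05, steps=2000):
    """The ALGORITHM: gradient descent that nudges two numbers to reduce prediction error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Average gradient of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b   # the MODEL: just the learned numbers (weights)

def predict(model, x):
    """Use the learned numbers to make a prediction; no training happens here."""
    w, b = model
    return w * x + b

xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]   # hypothetical data that happens to follow y = 2x + 1
model = train(xs, ys)
print(model, predict(model, 10))
```

Once training is done, the algorithm is no longer needed to make predictions. The two stored numbers are the model.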

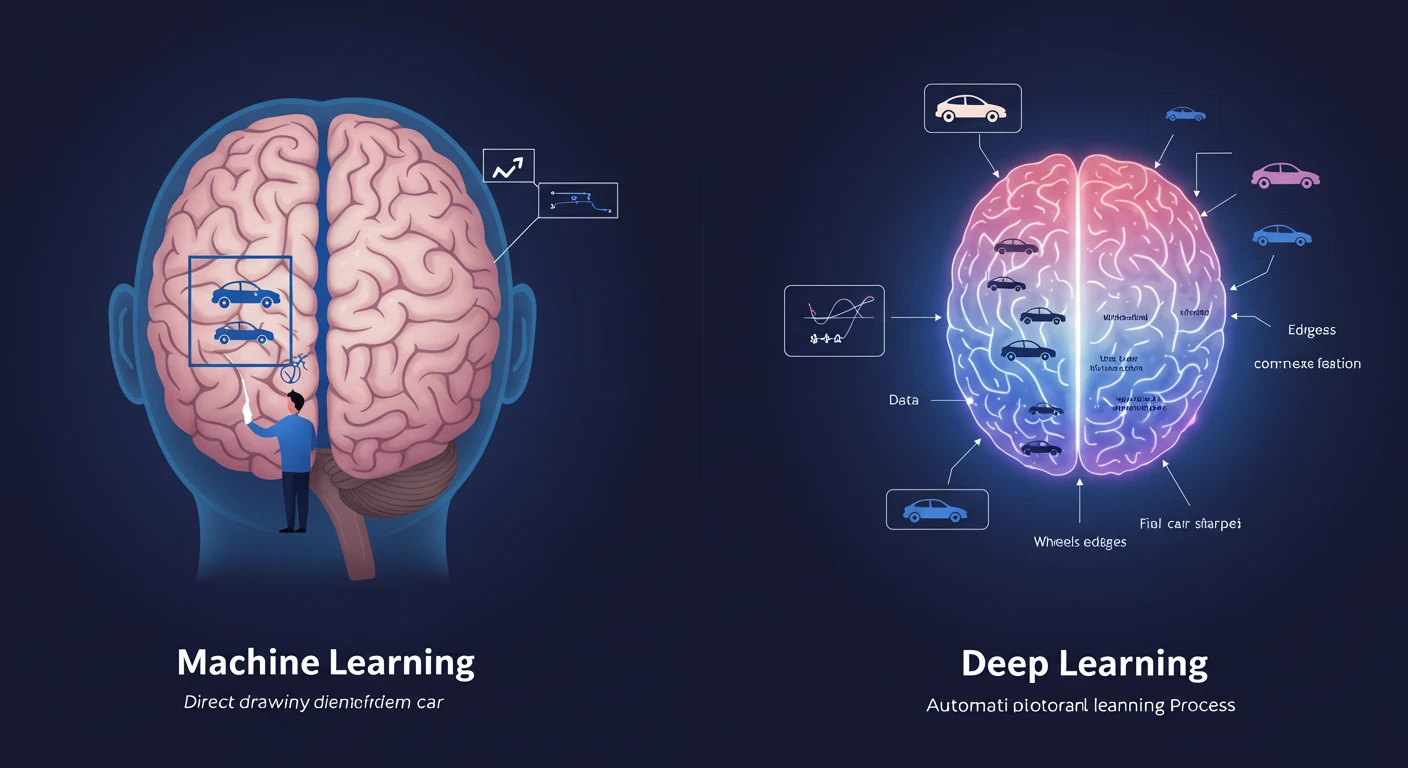

Machine Learning vs Deep Learning: A Simple Explanation

You will often hear the terms “AI,” “Machine Learning,” and “Deep Learning” used interchangeably, but they have distinct meanings.

- Artificial Intelligence (AI) is the broadest concept—the entire field of making machines smart.

- Machine Learning (ML) is a subset of AI. It is the most common technique used to achieve AI today. ML is focused specifically on systems that learn and improve from experience (data) without being explicitly programmed. Our cat analogy is a perfect example of Machine Learning.

- Deep Learning (DL) is a specialized subset of Machine Learning. It’s an even more advanced technique that uses complex, multi-layered structures called “artificial neural networks.”

If Machine Learning is like teaching the child, Deep Learning is like understanding how the child’s brain is physically structured to enable that learning. Deep Learning networks are inspired by the human brain, with many layers of interconnected “neurons” (simple processing nodes). Each layer learns to recognize increasingly complex features.

For example, when looking at cat photos:

- Layer 1 might learn to recognize simple edges and colors.

- Layer 2 might combine those edges to recognize shapes like ears and eyes.

- Layer 3 might combine those shapes to recognize cat faces.

- The final layer combines all this information to declare, with a certain probability, “This is a cat.”

This layered approach makes Deep Learning incredibly powerful for complex pattern recognition tasks like understanding speech, translating languages, and driving cars. It’s the engine behind many of the most impressive AI breakthroughs of the last decade.
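The layered structure can be sketched in a few lines of NumPy. This is a minimal illustration under several assumptions:

- The input is a hypothetical 8x8 grayscale image flattened into 64 numbers.
- The layer sizes are arbitrary.
- The weights are random rather than trained, so the output “cat probability” is meaningless until a training algorithm such as backpropagation adjusts them.

It shows only how each layer transforms the previous layer’s output.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def relu(z):
    """Simple nonlinearity: keep positive values, replace negatives with zero."""
    return np.maximum(z, 0.0)

def sigmoid(z):
    """Squash any number into the range 0..1 so it can be read as a probability."""
    return 1.0 / (1.0 + np.exp(-z))

# 64 inputs, three hidden layers, one output. Sizes are arbitrary choices.
layer_sizes = [64, 32, 16, 8, 1]
weights = [rng.normal(0, 0.1, size=(m, n)) for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]

def forward(pixels):
    """Pass the input through each layer; each layer builds on the previous layer's output."""
    activation = pixels
    for W, b in zip(weights[:-1], biases[:-1]):
        activation = relu(activation @ W + b)                   # hidden layer: weighted sum, then nonlinearity
    return sigmoid(activation @ weights[-1] + biases[-1])[0]   # final layer: one number between 0 and 1

image = rng.random(64)   # random stand-in for a real image
print(forward(image))
```

In a trained image network, the early layers tend to respond to simple patterns like edges and the later layers to combinations of them. The arithmetic at each step, though, is just the weighted sum and simple function shown here.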

The Main Flavors: Types of AI for Beginners Explained

Not all AI is created equal. From the simple spam filter in your email to the grand, sci-fi vision of a self-aware machine, AI exists on a spectrum of capability and complexity. For a beginner, it’s crucial to understand the three primary classifications that experts use. This framework helps manage expectations and understand where the technology is today versus where it might go in the future.

Artificial Narrow Intelligence (ANI): The Specialist AI We Use Daily

This is, by far, the most common—and currently, the only—form of AI in existence. Artificial Narrow Intelligence (ANI), also known as Weak AI, is an AI system that is designed and trained to perform a specific, narrow task. It operates within a pre-defined, limited context and cannot perform tasks outside of its single purpose.

However, “narrow” doesn’t mean “not powerful.” An ANI can be far more effective and efficient at its specific job than any human.

Examples of ANI are everywhere:

- Google Search: It’s an AI designed for the narrow task of finding and ranking the most relevant information on the web. It can’t order you a pizza.

- Siri, Alexa, and Google Assistant: These are voice-activated ANIs designed to understand and respond to a specific range of spoken commands. They can set a timer or play a song, but they can’t have a deep, meaningful conversation about philosophy.

- Netflix’s Recommendation Engine: This is a sophisticated ANI that analyzes your viewing history and compares it to millions of other users to predict what you’ll enjoy. Its sole purpose is to keep you engaged on the platform.

- Spam Filters: They perform one job: analyzing incoming emails to determine if they are junk. They are incredibly good at this single task.

- AI in Chess or Go: A program like AlphaGo is a genius at the game of Go, capable of defeating the best human players, but it has no ability to even understand the rules of checkers.

Every real-world application of AI you interact with today is a form of ANI. It’s a collection of highly specialized digital tools.

Artificial General Intelligence (AGI): The Human-Level AI of the Future

This is the type of AI that science fiction loves to portray. Artificial General Intelligence (AGI), or Strong AI, refers to a hypothetical machine with the ability to understand, learn, and apply its intelligence to solve any problem that a human being can.

An AGI would not be limited to a single task. It would be able to transfer knowledge from one domain to another. For example, an AGI could read a book on quantum physics, understand it, write a symphony inspired by its concepts, and then learn how to cook a gourmet meal. Science fiction usually gives such machines consciousness and self-awareness as well, although whether a generally capable AI would need, or even have, these traits is an open question. In short, it would possess the same kind of flexible, general intelligence that defines humanity.

Fictional Examples of AGI:

- Data from Star Trek: The Next Generation

- Samantha from the movie Her

- The Hosts from Westworld

It is critical to emphasize that AGI does not exist yet. Creating it is the holy grail for many AI researchers, but it remains a monumental scientific and philosophical challenge. Expert predictions of when, or even whether, it will be achieved vary widely, from a few years to many decades or more.

Artificial Superintelligence (ASI): The Theoretical Frontier

If AGI is the goal, Artificial Superintelligence (ASI) is the theoretical step beyond it. ASI is defined as an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom, and social skills.

The concept of ASI often brings up profound and sometimes unsettling questions. A superintelligent system could solve humanity’s biggest problems, like curing all diseases or ending climate change. However, thinkers like Nick Bostrom and the late Stephen Hawking have also warned of the potential existential risks. If a machine is vastly more intelligent than its creators, how can we ensure its goals remain aligned with ours?

ASI remains firmly in the realm of speculation. It’s a topic of intense debate among futurists and philosophers, but for now, it’s a distant possibility that builds upon the yet-unrealized dream of AGI. For beginners, the key takeaway is to focus on the powerful and transformative reality of ANI, while understanding AGI and ASI as the future horizons that drive the field forward.

Real-World AI Applications You’re Already Using

One of the most effective ways to grasp the scope of AI is to recognize how deeply it’s already integrated into your daily routines. AI isn’t just a lab experiment; it’s the invisible engine powering many of the services you rely on for convenience, entertainment, and security. Recognizing these real-world AI applications makes the entire topic more tangible and less abstract. Many people are surprised to learn that they use AI systems every day without even realizing it.

In Your Pocket: Personal Assistants and Smart Replies

Your smartphone is a veritable Swiss Army knife of Artificial Narrow Intelligence.

- Voice Assistants (Siri, Google Assistant): When you say, “Hey Siri, what’s the weather today?” a chain of AI models kicks in. First, a speech recognition model converts your speech into text. Then, a Natural Language Processing (NLP) model analyzes the text to understand your intent (you want weather information). The assistant retrieves the data and composes a reply, and a text-to-speech model turns that reply into a human-like spoken response.

- Smart Replies & Autocorrect: In your messaging and email apps, AI predicts the word you’re typing or even suggests entire phrases. It learns from billions of examples of human conversations to offer contextually relevant replies like “Sounds good!” or “I’ll be there in 10 minutes.” This saves you time and is a direct application of predictive text AI.

- Photography: Modern smartphone cameras use AI to improve your photos. “Portrait Mode” uses AI to distinguish the person from the background and digitally blur the background to mimic a professional camera. AI also adjusts lighting, reduces noise in low-light shots, and even suggests the best frame.
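Predictive text can be illustrated with a bigram model, one of the oldest and simplest approaches. It counts which word tends to follow which, then suggests the most frequent followers. Modern phone keyboards use far more sophisticated neural models, so treat this as a minimal sketch. The message history and the helper names `train_bigrams` and `suggest` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(messages):
    """Count, for every word, which words tend to follow it."""
    following = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def suggest(following, word, n=3):
    """Return up to n of the most frequent words seen right after `word`."""
    return [w for w, _ in following[word.lower()].most_common(n)]

# A tiny hypothetical message history; real keyboards learn from vastly more text.
history = [
    "see you soon",
    "see you tomorrow",
    "see you soon then",
    "thank you so much",
    "I will be there soon",
]
model = train_bigrams(history)
print(suggest(model, "you"))
```

Because “soon” followed “you” most often in this tiny history, it comes first among the suggestions.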

On Your Screen: How Netflix and Spotify Know What You Like

Recommendation engines are one of the most commercially successful applications of AI.

- Netflix & YouTube: When you finish a show, the platform doesn’t just recommend something random. A powerful AI system analyzes dozens of factors: your viewing history, what shows you finish versus which ones you abandon, the genres you prefer, the actors you like, the time of day you watch, and even what other users with similar tastes have enjoyed. It then presents a personalized list designed to maximize your engagement. Using the tastes of similar users in this way is known as “collaborative filtering,” a classic ML technique.

- Spotify & Apple Music: Similarly, music streaming services use AI to curate playlists such as Spotify’s “Discover Weekly.” The AI analyzes your listening habits, such as the songs you play on repeat, the ones you skip, and the artists you follow. It then finds new music with similar audio characteristics (tempo, key, instrumentation) and listener profiles, introducing you to your next favorite band.
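Below is a minimal sketch of one textbook form of collaborative filtering: user-based, with cosine similarity. It assumes a tiny hypothetical table of ratings. This is not how Netflix or Spotify actually implement their systems, which combine many signals and far larger datasets.

```python
import math

# Hypothetical ratings (1 to 5) that each user gave to shows they watched.
ratings = {
    "ana":   {"Show A": 5, "Show B": 4, "Show C": 1},
    "ben":   {"Show A": 4, "Show B": 5, "Show D": 5},
    "chloe": {"Show C": 5, "Show E": 5, "Show A": 1},
}

def cosine_similarity(r1, r2):
    """How alike two users' tastes are, based only on the shows both have rated."""
    shared = set(r1) & set(r2)
    if not shared:
        return 0.0
    dot = sum(r1[s] * r2[s] for s in shared)
    norm1 = math.sqrt(sum(r1[s] ** 2 for s in shared))
    norm2 = math.sqrt(sum(r2[s] ** 2 for s in shared))
    return dot / (norm1 * norm2)

def recommend(user, ratings):
    """Rank unseen shows by other users' ratings, weighted by how similar each user is to `user`."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        for show, rating in their_ratings.items():
            if show not in ratings[user]:
                scores[show] = scores.get(show, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)

print(recommend("ana", ratings))
```

Ana is recommended Show D before Show E even though both were rated 5. The difference is who gave the rating: Ben, whose past ratings closely match hers, is the one who loved Show D.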

Behind the Scenes: Banking Fraud Detection and Medical Imaging

Some of the most critical AI applications work silently in the background to keep you safe and healthy.

- Financial Fraud Detection: Your bank uses AI to protect you from fraud. The AI models are trained on millions of transaction records to learn your normal spending behavior—where you shop, how much you typically spend, and when. If a transaction suddenly appears that deviates wildly from this pattern (e.g., a large purchase in a different country at 3 AM), the AI flags it as potentially fraudulent and can automatically block the transaction and alert you. This system saves consumers and banks billions of dollars annually.

- Medical Diagnosis: AI is revolutionizing healthcare. In radiology and ophthalmology, AI models are being trained to analyze medical images like X-rays, MRIs, CT scans, and retinal photographs. In some studies, these models have detected signs of diseases like cancer or diabetic retinopathy as accurately as, or more accurately than, human specialists. They act as a powerful “second opinion,” helping doctors make faster, more accurate diagnoses.
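The core idea behind fraud detection is to learn what “normal” looks like and flag large deviations. That idea can be sketched with a simple statistical rule. This minimal example looks at a single feature, the purchase amount. It flags anything more than three standard deviations from the customer’s average, in other words a z-score above 3. The threshold, the purchase history, and the `is_suspicious` helper are assumptions for illustration. Real bank systems use machine learning models over many features such as location, time, and merchant.

```python
import statistics

def is_suspicious(history, amount, threshold=3.0):
    """Flag an amount lying more than `threshold` standard deviations from usual spending."""
    mean = statistics.mean(history)    # "normal" spending learned from past purchases
    spread = statistics.stdev(history)
    if spread == 0:
        return amount != mean
    z_score = (amount - mean) / spread
    return abs(z_score) > threshold

# Hypothetical past card purchases, in dollars.
past_purchases = [12.50, 40.00, 23.75, 8.99, 55.20, 31.10, 19.99, 27.40]
print(is_suspicious(past_purchases, 35.00))
print(is_suspicious(past_purchases, 2400.00))
```

A single-feature rule like this would also flag a legitimate one-off purchase, such as a new laptop. That is one reason production systems weigh many signals together.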

A New Frontier: AI in Creative Arts and Content Generation

Until recently, creativity was seen as a uniquely human domain. That is rapidly changing. A new field called Generative AI is pushing the boundaries of what machines can create.

- AI Art Generators (Midjourney, DALL-E 2): These tools allow you to type a simple text prompt—like “an astronaut riding a horse on Mars in the style of Van Gogh”—and the AI generates a stunning, original image in seconds. It has learned the relationship between words and visual concepts from billions of image-text pairs from the internet.

- AI Writing Assistants (ChatGPT, Jasper): These large language models (LLMs) can write emails, draft articles, generate marketing copy, and even write computer code based on a user’s prompt. They are transforming how content is created, acting as powerful brainstorming partners and productivity tools.

These examples are just the tip of the iceberg, but they illustrate a key point: AI is a versatile tool that is already enhancing our world in countless ways, from the mundane to the miraculous.

Getting Started with AI: Your Learning Path for 2025

Now that you have a solid grasp of what AI is, how it works, and where it shows up in your life, you might be feeling a spark of curiosity to learn more. The good news is that there has never been a better time for getting started with AI. The resources available today are more accessible and beginner-friendly than ever before. Whether you’re just curious or considering a future career in tech, here is a practical, step-by-step learning path you can follow. A common myth I encounter is that you need to be a math genius or an expert coder to begin. This simply isn’t true.

Step 1: Build Foundational Knowledge (No Coding Needed!)

Before you ever write a line of code, the most important step is to build a strong conceptual understanding. Your goal here is to become “AI literate”—to understand the language, the key ideas, and the societal implications.

- Read Reputable Blogs and Newsletters: Follow sources that specialize in explaining AI in plain English. Websites like The Verge, Wired, and MIT Technology Review have excellent AI sections. Newsletters like Ben’s Bites or The Neuron offer daily summaries of the latest developments.

- Watch YouTube Explainers: Channels like 3Blue1Brown (for visual math concepts), StatQuest with Josh Starmer (for statistics and machine learning), and Lex Fridman (for in-depth interviews with top researchers) are fantastic resources.

- Take an Introductory Online Course: Platforms like Coursera and edX offer free or low-cost introductory courses from top universities. Look for courses like Andrew Ng’s “AI For Everyone” on Coursera. It’s specifically designed for a non-technical audience and provides a brilliant overview of the business and societal aspects of AI.

Step 2: Play with Low-Code and No-Code AI Tools

The best way to learn is by doing. You can get hands-on experience with AI capabilities without writing any code. This is an empowering and fun way to see the technology in action.

- Experiment with Generative AI: Spend time with tools like ChatGPT (for text) and Microsoft Designer’s Image Creator (powered by DALL-E 3, for images). Give them creative prompts. Try to make them write a poem, plan a trip, or create a logo. This gives you an intuitive feel for the capabilities and limitations of large language models.

- Automate Tasks: Use tools like Zapier or Make.com. They now have AI integrations that let you do things like “Summarize incoming emails with AI and put the summary in a Google Sheet.” This shows you how AI can be used for practical productivity.

- Explore AI-Powered Creative Tools: If you’re interested in video or music, check out tools like RunwayML or Descript, which use AI for video editing (e.g., automatically removing backgrounds) and audio transcription.

Step 3: For the Ambitious: An Introduction to Python and Key Libraries

If you’ve completed the steps above and your curiosity is stronger than ever, you might be ready to dip your toes into the technical side. This is the path for those who think they might want to build AI applications themselves one day.

- Learn the Basics of Python: Python is the undisputed king of programming languages for AI and Machine Learning. Its syntax is relatively simple and readable, making it beginner-friendly. There are countless free resources like freeCodeCamp, Codecademy, and the book Automate the Boring Stuff with Python to get you started.

- Understand Key Libraries: You rarely build AI from scratch. Instead, you use powerful, pre-built libraries. The most important ones to know about are:
  - NumPy & Pandas: For numerical computing and for organizing and manipulating data.
  - Scikit-learn: For beginner-friendly, traditional machine learning tasks.
  - TensorFlow & PyTorch: For advanced deep learning and building neural networks.
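For a first taste of these libraries, here is a minimal scikit-learn sketch. It assumes the package is installed, for example with `pip install scikit-learn`. The code trains a decision tree on the small Iris flower dataset that ships with scikit-learn. It then checks the tree’s accuracy on examples held back from training. The dataset and the choice of classifier are illustrative picks, not the only way to start.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# A small, classic dataset bundled with scikit-learn: flower measurements labeled by species.
X, y = load_iris(return_X_y=True)

# Hold back 30% of the examples to test the model on flowers it has never seen.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

model = DecisionTreeClassifier(random_state=42)
model.fit(X_train, y_train)              # the learning step
accuracy = model.score(X_test, y_test)   # fraction of unseen flowers labeled correctly
print(f"Accuracy on unseen flowers: {accuracy:.2f}")
```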

You don’t need to master these overnight. The goal is simply to start a free “Intro to Python” course and learn what these tools are used for. This structured approach helps ensure you won’t get overwhelmed, building your knowledge from the conceptual to the practical and, finally, to the technical.

The Big Questions: Ethical Implications and the Future of AI

No discussion of AI for beginners would be complete without honestly addressing the significant ethical challenges and societal questions that accompany this powerful technology. As an expert in this field, I believe it’s our responsibility to be transparent and proactive about these issues. Building trustworthy AI requires us to confront its potential downsides, not ignore them. A truly intelligent approach to AI is one that is not only technologically sophisticated but also ethically conscious and human-centered.

The Problem of Bias: If You Teach AI Biased Data, You Get Biased Results

This is perhaps the most pressing ethical challenge in AI today. As we’ve learned, AI models learn from the data they are given. But what if that data reflects existing societal biases? The AI will learn, amplify, and perpetuate those biases at a massive scale. This is often summarized as “bias in, bias out.”

- A Real-World Case Study: In 2018, Reuters reported that an experimental AI recruiting tool developed by Amazon showed a significant bias against female candidates. The reason? The model was trained on resumes submitted to the company over the previous decade, most of which came from men. The AI learned that being male was a predictor of success. It penalized resumes that contained the word “women’s” (e.g., “captain of the women’s chess club”) and downgraded graduates of two all-women’s colleges.

This example highlights a critical truth: AI is not inherently objective. It is a mirror that reflects the data we feed it, warts and all. Addressing this requires a concerted effort to audit datasets for bias, develop techniques for algorithmic fairness, and ensure diverse teams are building and testing these systems.

Privacy Concerns in a Data-Driven World

AI’s thirst for data creates a natural tension with our right to privacy. The more data an AI has about you, the more personalized and effective its services can be. Your Netflix recommendations are good because Netflix tracks everything you watch. Your navigation app knows the fastest route because it tracks the real-time location of thousands of other users.

This raises critical questions:

- How much of our data are we willing to trade for convenience?

- Who owns the data we generate?

- How is that data being protected from misuse or breaches?

Regulations like the GDPR in Europe and the CCPA in California are first steps toward giving individuals more control over their data, but the debate is far from over. A trustworthy AI ecosystem must be built on a foundation of transparency and user consent regarding data collection and usage.

The Future of Work: Augmentation, Not Just Automation

The fear that “robots will take all our jobs” is a common one. While it’s true that AI and automation will displace certain types of jobs—particularly those that are repetitive and predictable—the narrative of pure automation is incomplete. The more likely future is one of augmentation, where AI acts as a powerful collaborator that enhances human capabilities.

- Radiologists may use AI to pre-screen scans, allowing them to focus their expert attention on the most complex cases, which could lead to better patient outcomes.

- Lawyers can use AI to sift through millions of legal documents in minutes, freeing them up to focus on strategy and client interaction.

- Software developers are already using AI assistants to write code faster, although the generated code still needs careful human review.

The challenge is not stopping technology, but adapting to it. This will require a societal focus on lifelong learning, reskilling, and upskilling. The jobs of the future will increasingly be those that leverage uniquely human skills: creativity, critical thinking, emotional intelligence, and complex problem-solving—using AI as a tool to amplify those skills.

Conclusion: Your Journey into AI Has Just Begun

We’ve traveled a long way, from demystifying the term “Artificial Intelligence” to understanding the core mechanics of how it learns. We’ve seen how ANI is a powerful specialist tool already woven into our lives, explored the future possibilities of AGI, and mapped out a clear path for anyone looking to get started with AI. We also faced the critical ethical questions that must guide its development.

The most important takeaway is this: AI is a tool. Like any powerful tool, its impact depends entirely on how we choose to build and use it. Understanding its basic concepts is no longer a niche technical skill; it’s becoming a fundamental form of modern literacy. You don’t need to become a data scientist to grasp the principles and participate in the conversation about its role in our world. Your journey into AI has just begun, and armed with this foundational knowledge, you are now equipped to follow its evolution with confidence and insight.

The best time to start learning was yesterday. The second-best time is now.

What application of AI excites or concerns you the most? Share your thoughts in the comments below!

Frequently Asked Questions (FAQ)

1. What is the simplest definition of AI? Artificial Intelligence (AI) is the field of computer science focused on creating machines that can perform tasks that typically require human intelligence, like learning, reasoning, and problem-solving.

2. Can I learn AI without knowing how to code? Absolutely. You can gain a deep understanding of AI concepts, applications, and ethics without writing any code. Courses like “AI For Everyone” and experimenting with no-code AI tools are great starting points.

3. What is the main difference between AI and Machine Learning? AI is the broad concept of creating intelligent machines. Machine Learning (ML) is the most common method used to achieve AI, where machines learn from data to improve their performance on a task.

4. Is Siri or Alexa considered AI? Yes, Siri and Alexa are excellent examples of Artificial Narrow Intelligence (ANI). They use AI for speech recognition, natural language processing, and task execution within a limited domain.

5. What are three real-world examples of AI? Three common examples are: 1) Recommendation engines on Netflix or Spotify, 2) Spam filters in your email inbox, and 3) Fraud detection systems used by your bank.

6. Is AI dangerous? The technology itself is not inherently dangerous, but it can be used for harmful purposes or have unintended negative consequences, such as perpetuating bias or compromising privacy. The ethical development and regulation of AI are crucial to mitigate these risks.

7. How long does it take to learn the basics of AI? You can learn the fundamental concepts, terminology, and real-world applications of AI in just a few weekends of dedicated reading and watching introductory videos. Becoming a technical practitioner, however, requires years of study.